CIMTech.ai

High-performance processors for AI, everywhere

The first truly scalable and reliable analog in-memory computing

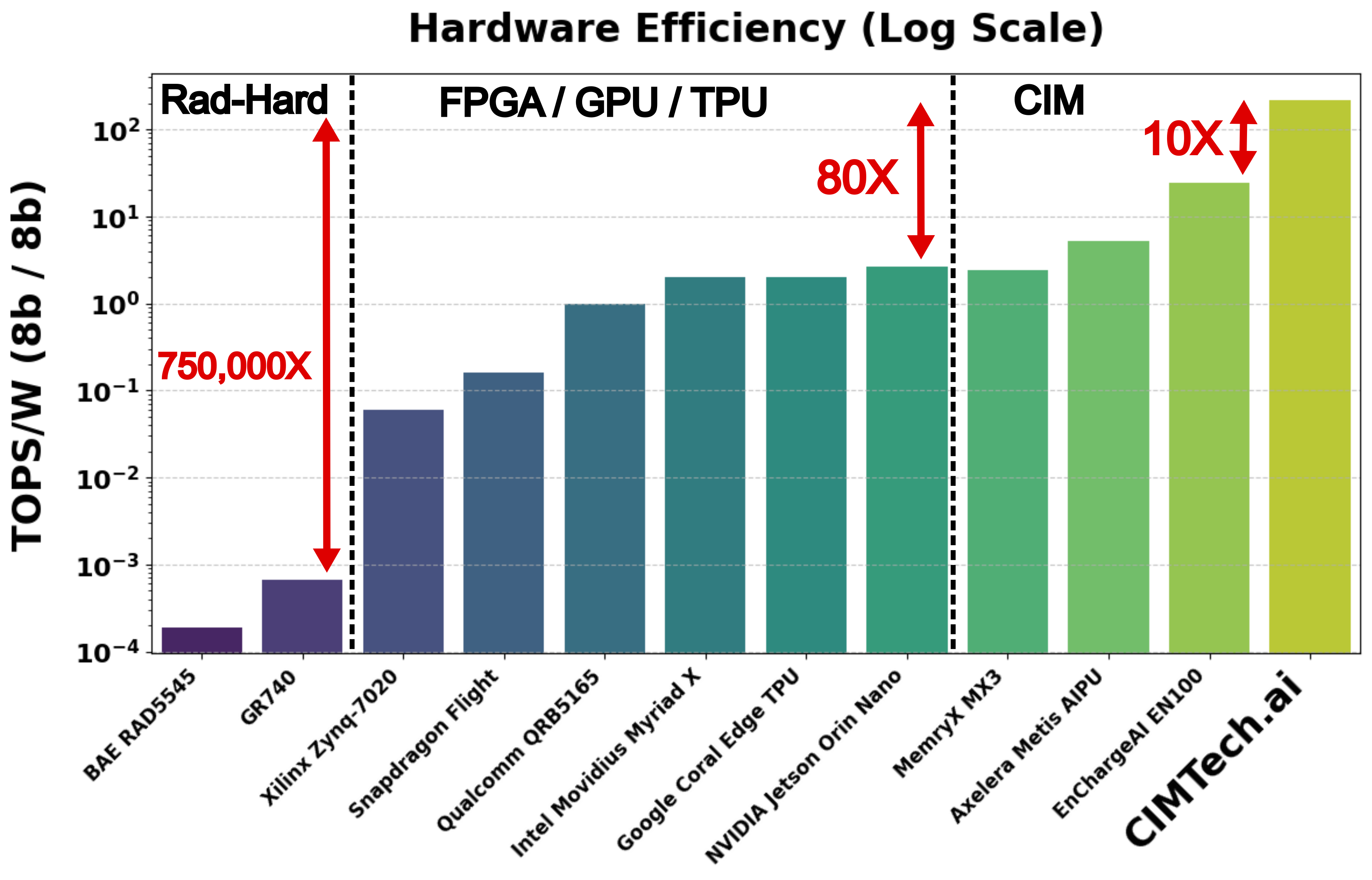

We need sustainable computing for AI

Bottlenecked by memory wall (<10 TOPS/W)

No bottleneck (200+ TOPS/W)

Can fit entire model in CIM
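To make the two efficiency figures above easier to compare, the sketch below converts TOPS/W into energy per operation. It relies only on the unit identity 1 TOPS/W = 10^12 operations per joule. The function name `energy_per_op_fj` is hypothetical. The inputs are the boundary values quoted above (<10 and 200+ TOPS/W), so the results are bounds, not measurements.

```python
def energy_per_op_fj(tops_per_watt: float) -> float:
    """Convert an efficiency in TOPS/W to energy per operation in femtojoules.

    1 TOPS/W = 1e12 operations per joule, so
    energy per op = 1 / (tops_per_watt * 1e12) J = 1000 / tops_per_watt fJ.
    """
    if tops_per_watt <= 0:
        raise ValueError("efficiency must be positive")
    return 1000.0 / tops_per_watt


# Boundary values taken from the figures quoted above (illustrative only).
memory_wall_fj = energy_per_op_fj(10)   # at 10 TOPS/W; "<10 TOPS/W" means at least this much energy
cim_fj = energy_per_op_fj(200)          # at 200 TOPS/W; "200+ TOPS/W" means at most this much energy

print(f"Memory-wall-bound: >= {memory_wall_fj:.0f} fJ per operation")
print(f"CIM claim:         <= {cim_fj:.0f} fJ per operation")
print(f"Implied gap:       >= {memory_wall_fj / cim_fj:.0f}x less energy per operation")
```

Taken at face value, the quoted figures imply a gap of at least 20x in energy per operation.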

Compute-in-Memory (CIM)

Non-volatile memory (NVM)

Breakthrough system design

First product will fit in the palm of your hand and use <1 W

Future products will leverage advanced packaging to scale beyond today's limits

Moore's law is dying. We're unlocking new scaling laws

| Technology | Companies | Power Consumption | Key Limitation |
|---|---|---|---|
| GPU/TPU | NVIDIA, AMD, Google | High power (~1,000 W) | Efficiency blocked by memory wall |
| FPGA | Xilinx, Altera | Medium power (~100 W) | Not optimized for AI |
| Digital CIM | MemryX, D-Matrix | Low power (~10 W) | Low capacity; will hit the memory wall |
| Analog CIM | EnChargeAI, Mythic, Sagence | Low power (~5 W) | A/D conversion bottleneck; inference only |

Providing high-impact solutions from edge to datacenter

Real-time processing in low-network, multi-platform environments

Smart sensors, 5G networks, wearables, infrastructure

Local LLMs, AI Agents, AI PCs, Servers, Data Centers

Our hardware's efficiency is necessary to achieve AGI